Too Slow

Developing software takes longer than we want.

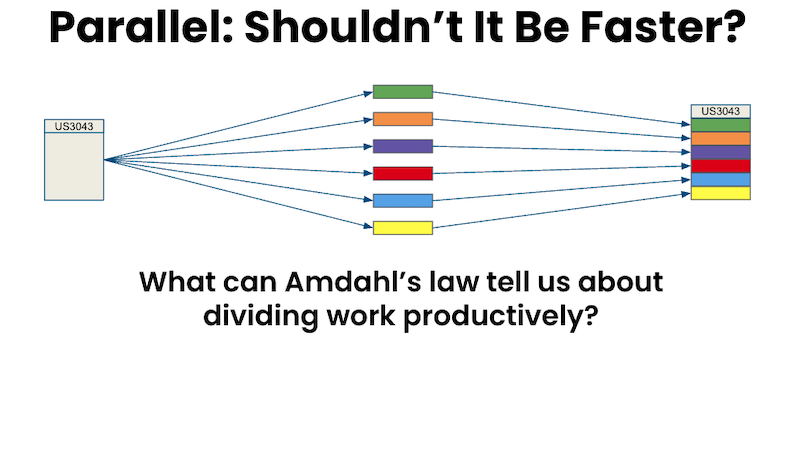

In hopes of getting work done sooner, teams (or their managers) divide jobs into tasks that can be performed asynchronously[1].

The assumption that dividing work up is faster is seldom challenged. It’s a matter of faith rather than measurement: dividing work is a familiar habit, and ticket systems support doing it this way, so it feels comfortable.

Teams have been consistently losing the parallel game for years without realizing it, because they never measured, or had any way to reason about, the time being saved or lost. They rely on faith and familiarity.

Sometimes it works incredibly well, though.

Is this random?

Is it luck?

Does it only work when the people are hard-core and good enough?

Is there some rational, logical reason why it’s only sometimes successful?

Amdahl’s Law

Gene Amdahl studied parallelization extensively. He was a computer scientist who worked on mainframe computers at IBM and later at his own companies, including Amdahl Corporation (which made supercomputers).

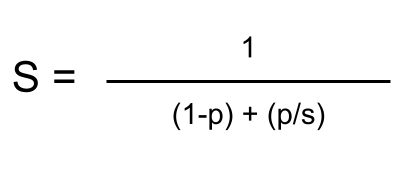

He gave us a useful observation, represented as this equation:

- S is the overall speedup you can expect

- (p) is the part of the process that can be sped up by working in parallel

- (1-p) is the part of the process that cannot be made parallel

- (s) is the speedup factor gained by going parallel
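Written out in its usual textbook form, the relationship among these terms is:

```latex
S = \frac{1}{(1 - p) + \dfrac{p}{s}}
```

The denominator is the time that stays serial, (1-p), plus the parallel part p shrunk by its speedup factor s. As s grows without bound, S approaches 1/(1-p), so the non-parallel part caps the overall speedup no matter how large s becomes.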

On the surface this might seem a little elementary: you have the part of the process that doesn’t run in parallel plus the part that does. Hardly brilliant, and hardly shocking at first glance.

But hold on a minute. There is more to think about.

Provided that the part of the process we want to speed up (p) is a critical area for performance, and that we have a meaningful speedup factor (s), and that we don’t have significant complications to the rest of the process (1-p part), we can see a measurable speedup by working in parallel.
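A minimal Python sketch of the equation shows how these conditions interact. The function name `amdahl_speedup` and the sample values of p and s are illustrative assumptions, not figures from any real team:

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup S from Amdahl's law.

    p: fraction of the process that can be done in parallel (0..1)
    s: speedup factor applied to that parallel fraction
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    # Serial part stays as-is; parallel part shrinks by the factor s.
    return 1.0 / ((1.0 - p) + p / s)


# A large parallel fraction with a real speedup factor pays off...
print(round(amdahl_speedup(p=0.9, s=4), 2))  # 3.08
# ...but a small parallel fraction barely moves the needle, even with s=4.
print(round(amdahl_speedup(p=0.3, s=4), 2))  # 1.29
# The ceiling: however large s gets, S never exceeds 1 / (1 - p).
print(round(amdahl_speedup(p=0.5, s=1_000_000), 2))  # 2.0
```

Raising s helps little when p is small; under this equation the bigger lever is usually growing p or shrinking the (1-p) overhead.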

One would assume that splitting our team members up, each taking a part of the program, and all working in parallel would be faster and result in software that we can deliver sooner.

That is not always how it happens, though. Let’s explore…

Maybe it’s not parallel?

The parallel part of the process begins when we launch the parallel processors, or in our case when we assign the parts of the work to our developers.

It ends when the last of the parallel parts completes.

In most software development organizations, especially the ones struggling with slow delivery, the work is not parallel, only asynchronous. The two are not the same.

Asynchronous work isn’t necessarily parallel.

This is examined in more detail in the blog post “Scatter-Gather”, also on this site.

If any parts of the divided job are delayed or interrupted, that delay is included and extends the “parallel part” of the process.

What if the individuals are on different teams (perhaps a front-end and a back-end team) and each of those teams has different priorities? Imagine that team A starts the work right away and finishes its bit, but team B has more pressing concerns and doesn’t start its portion for a few weeks. That is asynchrony, not parallelism, and it lengthens the overall time to completion.
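A small sketch makes the point concrete. It assumes a hypothetical `Task` record holding a start delay and a work duration, both in working days, with numbers invented for illustration. The parallel part lasts from assignment until the last task finishes, so waiting counts just as much as effort:

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    start_delay_days: float  # days between assignment and when work actually begins
    work_days: float         # days of hands-on effort once started


def parallel_part_elapsed(tasks: list[Task]) -> float:
    """Elapsed days from assignment until the LAST task finishes.

    Any delay before a task starts is included, not just the effort.
    """
    return max(t.start_delay_days + t.work_days for t in tasks)


def total_effort(tasks: list[Task]) -> float:
    """Hands-on effort across all tasks, ignoring any waiting."""
    return sum(t.work_days for t in tasks)


truly_parallel = [Task("front-end", 0, 5), Task("back-end", 0, 5)]
# Team B starts 15 working days (about three weeks) after assignment.
asynchronous = [Task("front-end", 0, 5), Task("back-end", 15, 5)]

print(parallel_part_elapsed(truly_parallel))  # 5
print(parallel_part_elapsed(asynchronous))    # 20
print(total_effort(asynchronous))             # 10
```

In the asynchronous case the "parallel" part takes twice as long as the combined hands-on effort of both tasks.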

The individuals don’t have to be on different teams for parallelism to be broken. It often happens that individuals have personal work queues, making it unlikely that their asynchronous tasks will coincide.

The higher the individual utilization, the more likely at least one “parallel” task will queue and wait, swallowing up any speed gains.
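One way to see why uses a deliberately simple model, which is an assumption and not part of Amdahl’s law: if each of n people is independently busy with other queued work a fraction u of the time, the chance that at least one of their newly assigned tasks must wait is 1 - (1 - u)^n. The helper name `chance_some_task_waits` is hypothetical:

```python
def chance_some_task_waits(utilization: float, people: int) -> float:
    """Probability that at least one of `people` assigned tasks has to queue.

    Simplifying assumption: each person is independently busy with other
    work a fraction `utilization` of the time, so a newly assigned task
    waits with that probability.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # Complement of "nobody is busy when the work arrives".
    return 1.0 - (1.0 - utilization) ** people


for u in (0.2, 0.5, 0.8):
    print(f"utilization {u:.0%}, 4 people: {chance_some_task_waits(u, 4):.1%}")
```

With four people at 50% utilization, the model already gives about a 94% chance (1 - 0.5^4 = 0.9375) that at least one task waits.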

Consider a typical software development process where there are quality gates that may cause work to flow back to developers. If the work returns, then the task’s time expands. Backflow of work is a primary source of unpredictability, and in this case it can consume any intended time savings.

What is the pass rate, or First-Time Through rate, for your quality pipeline?[2] What is the turn-around time from when the work is submitted the first time until it is ready for integration?
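The rule that a pipeline’s pass rate is the product of its individual gates’ pass rates is easy to compute. The sketch below also estimates how many trips through the pipeline a piece of work needs on average, assuming every attempt passes independently with the same probability (a simplification; reworked items may pass at a different rate). The helper names, gate names, and rates are hypothetical:

```python
from math import prod


def first_time_through(gate_pass_rates: list[float]) -> float:
    """Pipeline pass rate: the product of each gate's pass rate."""
    return prod(gate_pass_rates)


def expected_submissions(ftt: float) -> float:
    """Average number of trips through the pipeline before work passes.

    Assumes each attempt passes independently with probability `ftt`,
    which makes the number of attempts geometrically distributed.
    """
    if not 0.0 < ftt <= 1.0:
        raise ValueError("ftt must be in (0, 1]")
    return 1.0 / ftt


gates = [0.9, 0.85, 0.95]  # hypothetical: code review, QA, security scan
ftt = first_time_through(gates)
print(f"FTT: {ftt:.2f}")
print(f"expected submissions: {expected_submissions(ftt):.2f}")
```

With those invented rates, fewer than three in four items pass the first time (0.9 × 0.85 × 0.95 ≈ 0.73), so each item makes about 1.38 trips through the pipeline on average, and every extra trip stretches the parallel part.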

For any of the above reasons (or dozens of others), the time from assignment until the completion of the final part may stretch out and consume any advantage we might have gained from allegedly parallelizing the tasks.

The Non-parallel Part

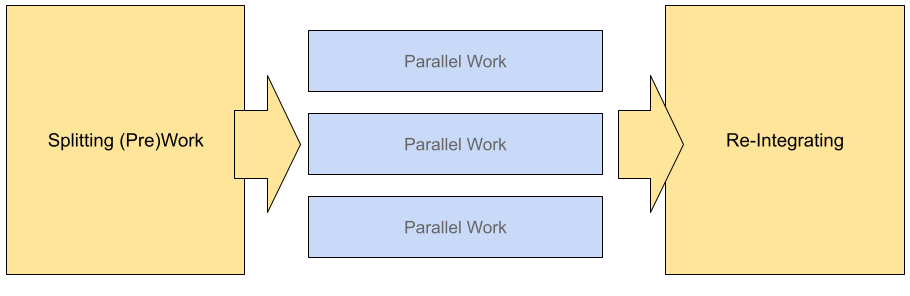

The equation treats the non-parallel part (1-p) as a single value, so one might not see that it includes extra work that precedes and follows the parallel work.

In order to divide work up among developers, we have to have a pretty solid plan in place. The plan must be complete enough to ensure that these independently-built parts will all fit together at the end of the process.

Prework involves getting a design that is complete enough to be portioned, breaking it into sufficiently complete tasks to be done in parallel, and assigning the work to be done[3]. Later, after the parallel work, someone must inspect, assemble, and test the finished work.

It may also involve marshalling or scheduling all the people who are to do the work, who may otherwise not be available to do it in parallel.

If the time for prework plus the time for parallel work plus the time to integrate and finish the work takes at least as long as it would have taken the available staff to do the work synchronously, then there is no speed advantage.
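The comparison is simple arithmetic. Here is a sketch with a hypothetical function name and invented durations in working days:

```python
def divided_is_faster(prework: float, parallel_elapsed: float,
                      integration: float, synchronous: float) -> bool:
    """True only if the whole divided job finishes sooner than the synchronous one.

    All durations are elapsed time in the same unit (e.g. working days);
    `parallel_elapsed` runs until the last parallel part is done.
    """
    return prework + parallel_elapsed + integration < synchronous


# Invented numbers: 3 days to design and split, 6 days until the last part
# lands, 4 days to inspect, assemble, and test, versus an estimated 12 days
# for the same people to do the job together, one task at a time.
print(divided_is_faster(prework=3, parallel_elapsed=6, integration=4, synchronous=12))  # False
```

Here the parallel phase alone is half the synchronous estimate, yet the 13-day total still loses to the 12-day synchronous plan.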

This happens more often than one might think.

Managing Parallel Work Is Harder

When work is divided, someone has to track it to make sure the prework is done, that the parallel tasks are all being completed well, and that the integration is progressing well at the end.

These steps require additional effort from the managers of the work, partly because the status of the job is fragmented and distributed.

It’s also a larger management burden because certain conditions must be met before the work can be reliably assigned for parallel development (cf. “Definition of Ready”), and integration cannot be completed until all the parallel parts have finished.

Hopefully all the individual pieces will integrate cleanly and easily[4].

Be aware, too, that there may be dependencies between jobs that would otherwise be parallel. Watching for these, resolving them quickly, and preventing them through clever design requires attention and skill.
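When such dependencies exist, the parallel part is bounded by the longest chain of dependent tasks (the critical path), not by the longest single task. Here is a sketch of that calculation, assuming the dependencies contain no cycles; the function name, task names, and durations are made up:

```python
from functools import cache


def critical_path_days(durations: dict[str, float],
                       depends_on: dict[str, list[str]]) -> float:
    """Earliest finish of the whole job when some tasks depend on others.

    A task can only start after all of its prerequisites finish, so the
    elapsed time is the longest chain of dependent tasks.
    Assumes the dependency graph has no cycles.
    """

    @cache
    def finish(task: str) -> float:
        # Start once every prerequisite is done (or immediately if none).
        start = max((finish(dep) for dep in depends_on.get(task, [])), default=0)
        return start + durations[task]

    return max(finish(task) for task in durations)


durations = {"api": 4, "ui": 3, "reports": 2}
print(critical_path_days(durations, {}))                                  # 4
print(critical_path_days(durations, {"ui": ["api"], "reports": ["ui"]}))  # 9
```

The same three tasks take 4 days when independent and 9 days when each waits on the one before it, even though nobody worked any harder.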

How Can We Win This?

We need only a few conditions:

- A low-cost, low-ceremony way to divide work

- A low-cost way to integrate

- A way to ensure the work is actually done in parallel

It is hard for someone outside the development team to ensure these conditions are met. It’s not impossible, but I’ve seen it fail and degrade to asynchrony and extra work more often than not.

When it works, it works a treat!

Within the team it’s easier to make it work.

It helps if the team limits its work in progress (WIP), and if the work is assigned to the team as a whole rather than in parts to individuals.

Success is likely with front-end, back-end, UX, data services, reliability, and cloud architecture all focused on the same feature at the same time. This can happen even without pair programming or teaming.

The trick is that they are communicating, adapting, and integrating all simultaneously[5].

When integration is continuous, there is little non-parallelizable work at the end of the period. This is possible when teams work in a Trunk-based way, or at least share the same branch among all the people working on a new feature.

It is a low-ceremony, high-energy way of working that provides quick results with a lower incidence of integration errors or escaped defects.

You can win this game. Amdahl’s equation gives you all the information you need if you can apply it.
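As one example of applying it, the equation can be rearranged to ask how much of the end-to-end process must be truly parallel to reach a target speedup: p = (1 - 1/S) / (1 - 1/s). Here is a sketch with a hypothetical function name and an invented goal:

```python
def parallel_fraction_needed(target_speedup: float, s: float) -> float:
    """Parallel fraction p needed to reach `target_speedup` given factor s.

    Rearranges S = 1 / ((1 - p) + p / s) into p = (1 - 1/S) / (1 - 1/s).
    Only targets between 1 and s are reachable (p = 1 gives S = s).
    """
    if s <= 1:
        raise ValueError("s must be greater than 1")
    if not 1 <= target_speedup <= s:
        raise ValueError("target_speedup must be between 1 and s")
    return (1 - 1 / target_speedup) / (1 - 1 / s)


# Hypothetical goal: deliver twice as fast when the parallel work runs 4x faster.
print(round(parallel_fraction_needed(target_speedup=2, s=4), 2))  # 0.67
```

In this illustration, a 2× overall gain from a 4× parallel speedup requires about two-thirds of the original elapsed time to be genuinely parallel, leaving at most one-third for prework, integration, and waiting.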

Where it is hard to win, or rare to win, perhaps it is best to work in a simple synchronous way and complete one task at a time.

Is this really a problem?

If you aren’t accounting for the additional non-parallel time and asynchrony, it is likely that you have been losing the parallel game.

A lot of people don’t consider the physics of the situation (as described by Amdahl’s law) and blindly assume that asynchronous work with late integration is the most efficient and speedy way to work. They lose this game continuously, but don’t realize that they’re losing.

There are definite engineering tradeoffs to be considered.

Is your team too slow and unpredictable even though you are doing the maximum amount of work in parallel, or because you are doing the work asynchronously with extra overhead?

That explains it!

Please consider the information here:

You may want to contact us for further training or coaching for Ways of Work that are more likely to give you the outcomes you want and need.

No, this isn’t part of our problem…

If you are not suffering the consequences of ill-considered parallelization, you may find there are ways to improve your process by focusing on creating quality with superior desk disciplines. Consider our Industrial Logic Ways of Work, including:

There are many ways to focus improvement on having higher overall throughput and satisfying the DORA metrics. We can discuss these with you, and help you make better software, sooner.

Footnotes

1. Breaking up work this way is often erroneously described as “divide and conquer”.

2. The pipeline’s pass rate is the product of the individual gates’ pass rates.

3. Often the person assigning the work goes to the extra trouble of dividing it so that each task fits a specific person’s skill set (for efficiency’s sake).

4. This is unlikely, since fragmented work is done by different people at different times, in different branches.

5. I’ve observed that software development is done best and most quickly when we focus all of the relevant skills on the task at hand.