There has been a lot of noise about the CrowdStrike outage, and rightly so, with many widely-used services suddenly unavailable and devices shutting down while in use. It has been an expensive disruption, recently estimated at US$5.4 billion.

Many thought-provoking pieces have been published, such as those by my friend Gitte Klitgaard and Dave Farley’s thoughtful video. Explanations and expositions are arriving online by the hour as I write this (July 25, 2024). I will try not to repeat all their information here.

The mustachioed, dark-hatted villain behind this catastrophe?

It was a bad data file, which triggered a defect.

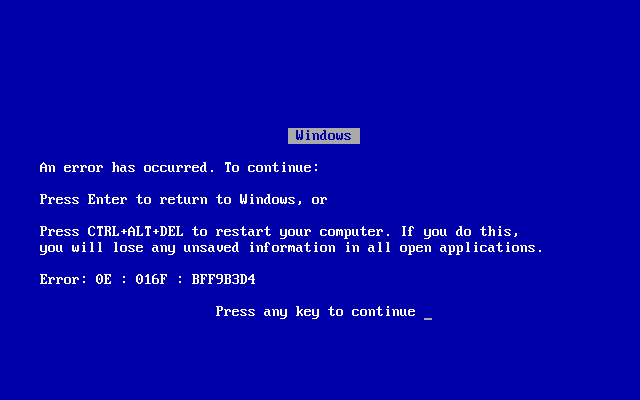

The “Channel 291” file had a problem, which triggered a longstanding software defect, which became quite serious because the Falcon sensor runs (and is certified to run) as a part of the Windows kernel. It caused systems to fail with a “Blue Screen of Death”.

As of this writing, it’s not even believed to be a recently-created bug. It’s been sitting there for years, like a landmine, waiting to be tripped.

It’s tempting to say “Some bad people caused it, so it’s a special circumstance. It couldn’t happen here.” After all, CrowdStrike’s Falcon Sensor product has already had a similar problem on its Debian Linux version, with less damage and upset. It has also been noted that the same executive who leads CrowdStrike today also led McAfee during a disturbingly similar outage over a decade ago.

That’s a false comfort.

The next big outage might involve some other crucial bit of infrastructure - a network manager, a cloud orchestrator, a virtual machine, a service container, or a common library. It may not involve the same individuals.

So what do we do about it?

Do we need better programmers?

Our industry always has and always will need more and better programmers.

Sadly, many companies only consume programming skills, and do little to help development staff improve them.

Our field changes too quickly for businesses to be consumers only. We can’t treat developers as if they emerged from academia fully formed, ready for tomorrow’s challenges. If we need better programmers, we will have to grow them.

If we want our teams to do great work consistently, we need to get past the idea that “you should already know everything” and “learning is what you do before you are hired” and move to an atmosphere of continuous learning. - Industrial Logic Blog from 2016

The most obvious answer is to provide training courses to allow developers, managers, and executives to raise their proficiency.

We can conduct boot camps and workshops, delivering skills necessary to sustain a safer, nimbler, more frugal way of working.

We can provide ongoing coaching and Real Work Workshops where businesses cannot halt production to engage in classroom learning.

And, of course, we can provide player/coaches to work directly in teams, sharing and spreading their competencies while materially participating in software delivery.

Inside our company, we grow our skills through ensemble programming. The best mechanism we’ve found for developing team skills without halting production is simply working in groups.

Ensemble learning is a powerful mechanism. We’re frequently told that people learn more in a few months of ensemble work than in years of solo work.

But will this ensure we are safe from a CrowdStrike-like crisis?

Well-trained developers are better at spotting flaws in their code.

Teams of trained individuals working collaboratively are even more likely to spot and stop defects.

Training may provide a crucial part of the solution, and better skill training may have led developers to avoid this problem entirely.

Do we need more testing?

Everyone does, because there is a tradeoff between how comprehensive and how affordable our testing efforts can be.

Manual testing is less repeatable, slower, and more expensive than automated tests.

Automated tests are inexpensive to run and have deterministic results, but are devoid of intuition, creativity, and taste. They will not spot some problems that a human tester might find obvious.

We find it’s important to automate repetitive and deterministic tests and use human beings to find issues with flow, consistency, and user experience (note: testing professionals will find correctness problems too).

A testing script can tell you your code is still working, but can’t tell you that using it sucks.

We use Test-Driven Development (TDD) to modify our software design quickly and safely. TDD is a development discipline rather than a testing discipline, yet it creates fast-running test suites as a side effect.

A few years ago, my coding partner and I recognized that we sometimes needed to hand-edit data files. It wasn’t long until we wrote a data validator for the files. We also wrote tests to run our file parser with ill-formed files. This way, we can’t easily publish bad files, and we won’t fail catastrophically if someone modifies the files poorly.

It is a fairly normal thing for a pair of programmers who use TDD to do.
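To make that concrete, here is a minimal sketch of the idea, not our actual validator and certainly not CrowdStrike's format. The three-field rule format and the names `Rule`, `BadDataFile`, `parse_rules`, and `load_rules_or_keep` are all invented for illustration. The parser rejects ill-formed input with a clear error, and the consumer keeps its last known-good data rather than failing.

```python
from dataclasses import dataclass

EXPECTED_FIELDS = 3  # hypothetical format: name, pattern, severity


class BadDataFile(ValueError):
    """Raised when a data file is ill-formed."""


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: str
    severity: int


def parse_rules(text: str) -> list[Rule]:
    """Parse comma-separated rule lines, rejecting ill-formed input."""
    rules = []
    for number, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        fields = [field.strip() for field in stripped.split(",")]
        if len(fields) != EXPECTED_FIELDS:
            raise BadDataFile(
                f"line {number}: expected {EXPECTED_FIELDS} fields, got {len(fields)}"
            )
        name, pattern, severity = fields
        if not name or not pattern:
            raise BadDataFile(f"line {number}: empty name or pattern")
        if not severity.isdecimal():
            raise BadDataFile(f"line {number}: severity must be a non-negative integer")
        rules.append(Rule(name, pattern, int(severity)))
    return rules


def load_rules_or_keep(text: str, current: list[Rule]) -> list[Rule]:
    """Consumer side: a bad file leaves the last known-good rules in place."""
    try:
        return parse_rules(text)
    except BadDataFile:
        return current
```

The same `parse_rules` function can serve as the publishing gate (refuse to ship a file that raises) and as the target of tests that feed it truncated lines, extra fields, or a file full of null bytes.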

Perhaps even more crucially, we use our test suites as part of the software development work, in the minute-by-minute work of coding, not only after a lot of work has been integrated.

This is the basis of continuous integration and continuous delivery.

Although continuous delivery to customers is not available to CrowdStrike (their code has to go through Microsoft certification before being released), perhaps having better and more frequent testing internally (continuously deployed, but not continuously delivered) would have helped.

Would these tests have spotted CrowdStrike’s issue?

Probably. Tests like the ones we routinely write could have prevented such a bug from being released.

There is more to reliability engineering than testing, but it’s hard to imagine reliability with poor testing.

Is the deployment model wrong?

Probably.

There is a technique most modern companies use, which may be called incremental delivery, or canary releases, or sometimes incremental rollout.

With incremental delivery, a new change is rolled out to maybe 1% of the customer base, generally customers with an amenable risk profile. Over time, the delivery goes out to more customers until ultimately it reaches the entire customer base.

A significant problem tends to manifest early in the release process, limiting the exposure and damage that a defect can create.
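As a rough sketch of the mechanism, assuming a hypothetical `deploy` callback that reports whether a host is healthy after the update: the stage percentages and the failure threshold below are made-up values, and a real rollout would also wait and watch telemetry between stages.

```python
from typing import Callable, Sequence


def staged_rollout(
    hosts: Sequence[str],
    deploy: Callable[[str], bool],
    stage_percents: Sequence[int] = (1, 10, 50, 100),
    max_failure_rate: float = 0.02,
) -> tuple[int, bool]:
    """Deploy to growing slices of hosts; halt when a stage looks unhealthy.

    Returns (number of hosts reached, whether the rollout completed).
    """
    reached = 0
    for percent in stage_percents:
        # Integer ceiling of len(hosts) * percent / 100.
        target = (len(hosts) * percent + 99) // 100
        batch = hosts[reached:target]
        if not batch:
            continue
        failures = sum(1 for host in batch if not deploy(host))
        reached = target
        if failures / len(batch) > max_failure_rate:
            return reached, False  # stop here; remaining hosts are untouched
    return reached, True


hosts = [f"host-{i}" for i in range(1000)]
print(staged_rollout(hosts, lambda host: False))  # a bad change stops at the first stage
print(staged_rollout(hosts, lambda host: True))   # a good change reaches every host
```

With 1,000 hosts and a change that breaks every machine it touches, this sketch halts after the first 10 hosts instead of reaching all 1,000.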

If CrowdStrike was attempting to address a serious, imminent threat, they may have intentionally skipped incremental release. I’ve not heard that this is the case, but it is possible that they took some shortcuts.

It seems likely that incremental delivery would have helped manage the damage from this release.

Indeed, today’s news tells us that CrowdStrike is adjusting its policy and will be performing canary releases in the future.

Do we need mechanisms for faster recovery?

Almost certainly.

CrowdStrike did release instructions for recovering affected machines pretty rapidly after the problem was made known. The recovery process is to reboot into “safe mode” and delete the channel 291 file, then reboot normally.

The process takes seconds to minutes per machine, but the problem is that it’s a manual procedure and there are millions of machines to remedy. It’s an expensive and time-consuming proposition.

If we have good observability and incremental release practices, we can recognize and respond to problems more quickly.

If the codebase is well-maintained, it is easier to write tests that isolate and recreate the problem, which makes it easier to create and prove a solution.

This is why we focus so much on code craft - we may need the code to be obvious and workable at the most inconvenient times!

If a company has a convoluted process with too much Work In Progress, it will be difficult, disruptive, and risky to deploy a correction.

We work in such a way that deploying changes is immediate and routine. When a defect slips through our development teams and manages to evade our safety net of tests, it’s easy and routine to roll out a fix. It’s easier and more convenient to roll out changes when the software does not run as part of the OS kernel!

The combination of techniques we’ve listed greatly reduces the chances of inconveniencing our customers and also limits the damage that a bad change might induce.

So how did CrowdStrike go wrong?

We just don’t know what process was being followed (or not followed) by the developers at CrowdStrike. We do not know how this problem managed to slip past all their defenses.

Without that knowledge, it’s hard to offer a certain solution.

Cybersecurity is a complex field that changes rapidly in response to threats. It is a high-speed “arms race” against people who are motivated to profit at others’ expense, anonymously, and from a distance.

I don’t know how subtle and complex the defect was that led to this well-publicized outage. I don’t know what shape the code is in. All I know is that this ill-formed data file managed to slip past their defenses this time.

We will eventually hear how they close the door on this kind of problem. We will have to watch and learn.

I hope this results in blameless retrospectives and systemic changes, rather than a performative scapegoating. I hope that they don’t keep their solution a secret.

By the way, as a brief warning to those who say “Nobody cares about your internal technical practices,” let me offer this:

Could processes and techniques like ours prevent similar failures?

We think it is likely.

The solutions they’re putting in place look like a subset of the work we have already been doing for years.

We know that our way of working has greatly improved delivery times and reduced defect counts for many thousands of developers by increasing their technical safety and managing risks more effectively.

This is all part of our mission to help companies deliver better software sooner.

Those are areas where we lean in.