
What Makes Integration Hard?

Software systems often need changes.

Sometimes the goal is to reduce runtime costs. In other cases, the focus is on improving performance. Changes are frequently made to add new features or capabilities, and others are needed to correct defects or inadequacies within the system.

A developer (or team) starts with an approved, stable version of the system’s source code. They create a set of changes (a “changeset”) to introduce new functionality or improve software behavior.

Any codebase will have many interacting constraints and rules. Much of the art of software development involves making new changes while remaining compatible with existing functionality.

Team A is working on a change that started from version A of the main development line. As an isolated change, it works fine.

Team B is working on a change also based on version A of the main code line, and their change also works fine.

The problem shows up much later. While each change works independently, the two don’t work together.

As long as changes are isolated, the developers are unaware of the defects they injected.
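Here is a minimal sketch of how that can happen. Everything in it is hypothetical and invented for illustration: a toy "codebase" held in a dictionary, a timeout setting, and two changesets. Team A changes the unit of the timeout and fixes the one caller it knows about. Team B adds a new caller written against version A.

```python
from typing import Callable, Dict

# A toy codebase: named functions that may call each other through the dict.
Codebase = Dict[str, Callable[..., object]]

def version_a() -> Codebase:
    """The approved baseline: the timeout is expressed in seconds."""
    return {
        "default_timeout": lambda: 30,  # seconds
        "describe": lambda cb: f"{cb['default_timeout']()}s",
    }

def team_a_change(cb: Codebase) -> Codebase:
    """Team A switches the unit to milliseconds and updates the caller it knows."""
    new = dict(cb)
    new["default_timeout"] = lambda: 30_000  # milliseconds now
    new["describe"] = lambda c: f"{c['default_timeout']() // 1000}s"
    return new

def team_b_change(cb: Codebase) -> Codebase:
    """Team B adds a retry budget, written while the timeout was in seconds."""
    new = dict(cb)
    new["retry_budget"] = lambda c: c["default_timeout"]() * 3  # assumes seconds
    return new

def checks_pass(cb: Codebase) -> bool:
    """Run every check that applies to the functions present in this version."""
    ok = cb["describe"](cb) == "30s"
    if "retry_budget" in cb:
        ok = ok and cb["retry_budget"](cb) == 90  # 3 x 30 seconds
    return ok

base = version_a()
print(checks_pass(team_a_change(base)))                 # A alone
print(checks_pass(team_b_change(base)))                 # B alone
print(checks_pass(team_b_change(team_a_change(base))))  # A merged first, then B
print(checks_pass(team_a_change(team_b_change(base))))  # B merged first, then A
```

Each change passes on its own. The combination fails whichever team merges first, so the merge order decides only who is holding the code when the failure is noticed.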

When an integration issue (an incompatible set of changes) surfaces, the developers must identify the underlying issues, locate the affected regions of code, and make the necessary design changes.

This rework usually falls to the team whose code changes were merged last. The first team’s changes integrate fine. This often makes the earlier-merged team seem superior to the teams whose code was equally valid but whose changeset was added just before the error was spotted.

When blame is given, it’s the “luck of the draw” who receives the blame. “It was fine before we added your code” doesn’t mean that the team merged last did a poor job. This is counterintuitive but worth considering. If the merges had happened in the opposite order, the other team would seem to be at fault.

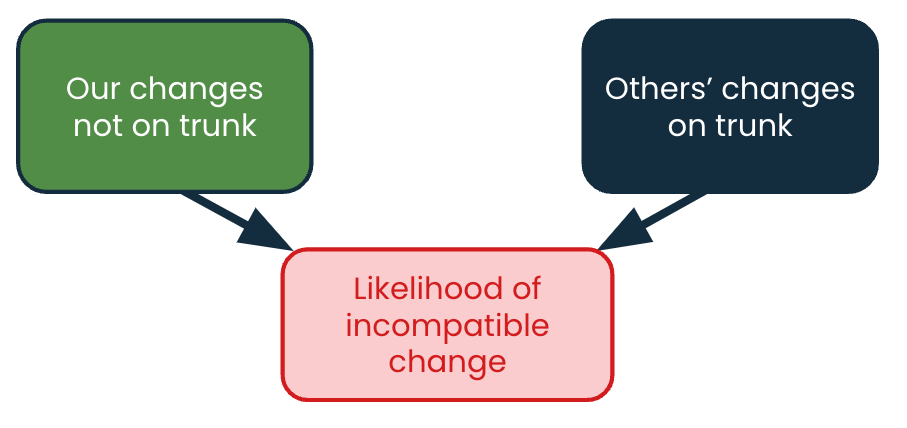

The problem is that neither team could tell that their changes would not mesh with the other team’s changes.

Large changesets are not only more likely to hide incompatibilities, but they are also harder to diagnose and repair.

The larger the changesets are, the greater the risk of divergence and the more complicated the remediation may be.

If incompatibilities go unnoticed until late in the process, you can expect:

- delayed releases

- missed release schedules

- flawed releases that must be rolled back

- error reports from customers

These are common problems in corporate software development arising from the same underlying cause.

These issues don’t occur in single-developer projects or when a small, tight team works on a greenfield project. You simply don’t encounter these problems when only one group of people works on one codebase with a single changeset at a time.

People from small, nimble startups who join their first large corporate development team are unprepared for problems of scale. The same is true for university graduates who have mostly worked solo on class assignments or in small groups.

Integration problems are problems of scale.

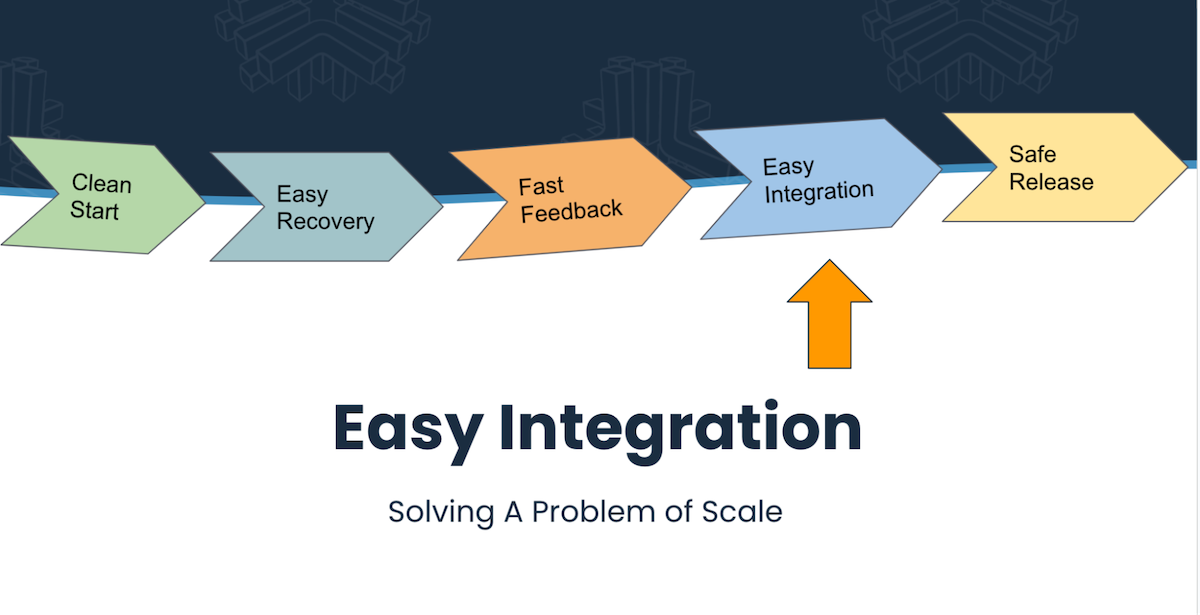

Significant factors for these integration problems are:

- The number of parallel changes

- The size of each changeset

- Deferring discovery of issues until it’s too late to recover gracefully
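As a rough illustration of the first factor, assume that any two parallel changesets might turn out to be incompatible. That is a simplifying assumption, not a measurement. Under it, the number of pairs that could collide grows much faster than the number of changesets. The function name below is my own.

```python
from math import comb

def pairwise_combinations(parallel_changes: int) -> int:
    """Number of distinct pairs of changesets that could interact."""
    return comb(parallel_changes, 2)

for n in (2, 5, 10, 20):
    print(n, "parallel changes ->", pairwise_combinations(n), "pairs")
```

Two parallel changes give one pair to worry about, ten give 45, and twenty give 190. This counts only pairs. Incompatibilities that need three or more changes to appear would add to it.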

How To Reduce Difficulty

Limit The Number of Parallel Activities

Sharing a codebase across many teams requires finesse and solid product knowledge, but several time-honored approaches are used with some success.

- Only do one thing to a product at a time. This is hard to do at scale, because organizations grow primarily to achieve more than one thing at a time. If you can do only one thing at a time in one codebase, you eliminate all the chances for collision.

- Run a single stream of new feature work in parallel with fixes. Defects are (by nature) identified in existing code, whereas new features tend to involve creating new code. There may be some overlap, but it is minimized. Separating work this way may run counter to business priorities when the focus is on new features, and there is still a risk that some oversized core modules will receive conflicting changes.

- Ensure that parallel features affect different areas of the codebase. Doing this requires intimate knowledge of the source code, since things that seem unrelated in the eyes of users (and non-technical managers) may connect tightly in the codebase. This also may run counter to business priorities by delaying highly desired but risky changes.

- Limit radical changes while implementing a feature. This can minimize merge collisions, but it also leaves the code with accumulated neglect (often called technical debt). There must be a follow-on task to return to the code and rationalize the design. This technique trades one set of technical risks for another, so be sure that you are solving the more troubling issue.

- More modularity of design and the use of interfaces can help to make parts of the system independently modifiable. This is one of the useful features of Hexagonal Architecture.

- While I have no significant experience in Event Driven Architecture, I am often told that it solves a lot of problems via modularity and repeatability, so that one can replace entire services without upset, and can run old versions of an app in parallel with new ones to judge readiness for switch-over.
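The point about modularity and interfaces in the list above can be sketched in a few lines. This is a minimal illustration in the spirit of a port and its adapters, not a full Hexagonal Architecture. The names `PaymentGateway`, `Checkout`, and `FakeGateway` are hypothetical.

```python
from typing import List, Protocol

class PaymentGateway(Protocol):
    """The interface (port) that the core logic depends on."""
    def charge(self, cents: int) -> bool: ...

class Checkout:
    """Core logic: knows only the interface, never a concrete gateway."""
    def __init__(self, gateway: PaymentGateway) -> None:
        self.gateway = gateway

    def pay(self, cents: int) -> str:
        if cents <= 0:
            raise ValueError("amount must be positive")
        return "paid" if self.gateway.charge(cents) else "declined"

class FakeGateway:
    """One adapter. A team can rewrite or replace it without touching Checkout."""
    def __init__(self, accept: bool = True) -> None:
        self.accept = accept
        self.charged: List[int] = []

    def charge(self, cents: int) -> bool:
        self.charged.append(cents)
        return self.accept

print(Checkout(FakeGateway(accept=True)).pay(500))
print(Checkout(FakeGateway(accept=False)).pay(500))
```

As long as the interface holds still, one team can change `Checkout` while another changes a gateway, and their changesets touch different files. Changing the interface itself is still a shared change that both sides must coordinate.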

Avoiding incompatible changesets is an attractive idea, but hard to achieve for management. It requires intimate knowledge of the code and a commitment to rationalizing the design after adding features.

I wouldn’t rely on trying to avoid the issue since doing so lacks safety.

Once a collision or incompatibility sneaks into the changeset, if you spot it, you pay the full price to remedy the situation.

Let’s see what else we can do, either in addition to or instead of avoiding the problem.

Reduce Period of Isolated Code Development

If we do less work between integrations, we have smaller changesets with a lower chance of collision. Less code also means less effort to isolate and correct a fault.

We can have smaller changesets if we break our features into smaller sub-features, each one a fully working subset of the planned, final result.

Only one small change per changeset makes each changeset small, and ensures they are completed more often.

This will mean more integrations, but each will be simpler.

We use many story-splitting techniques such as Role-Action-Context exercises, Use Case Mapping, Story Mapping, and Example Mapping. The specific technique you choose is less important than the act of splitting a feature into a series of fully-working “value drops” or “slices.”

This is iterative and incremental development.

The increment must be fully working, even if the feature implementation is not comprehensive. It is best if it has a suite of tests to ensure that it is fully working and that some other feature doesn’t “break” its functionality.

The next increment may broaden the functionality of that feature. In this way, the feature is “grown” in a series of releases, rather than “constructed” in a single pass. This means that we may be integrating just a handful of lines of code at a time, all tightly focused on specific behaviors in the code.
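As a small sketch of growing a feature this way, imagine a hypothetical search feature delivered in two increments. The function names and the checks are invented for illustration. The first increment supports only exact matches, but it is fully working. The second broadens the behavior and must still satisfy every check written for the first.

```python
from typing import Callable, List

Search = Callable[[List[str], str], List[str]]

def search_v1(items: List[str], query: str) -> List[str]:
    """Increment 1: exact matches only, but fully working."""
    return [item for item in items if item == query]

def search_v2(items: List[str], query: str) -> List[str]:
    """Increment 2: broadened to case-insensitive substring matches."""
    q = query.lower()
    return [item for item in items if q in item.lower()]

def increment_1_checks(search: Search) -> None:
    assert search(["apple", "pear"], "pear") == ["pear"]
    assert search(["apple"], "plum") == []

def increment_2_checks(search: Search) -> None:
    increment_1_checks(search)  # earlier behavior must keep working
    assert search(["Apple pie", "pear"], "apple") == ["Apple pie"]

increment_1_checks(search_v1)  # the first slice is releasable on its own
increment_2_checks(search_v2)  # the second slice keeps the old checks green
```

Each slice is a few lines of code with its own checks, so each integration is small, and the growing suite guards the earlier slices against later changes.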

The small changesets and tight focus on a narrow bit of functionality reduce the opportunity for collisions. They also reduce the cost of collisions.

We often see teams with smaller changesets completing incremental changes many times a day or at least several times per week.

Find Problems Sooner

We don’t want to find out about large, incompatible changesets on the last day before a release.

The longer we hold changesets in isolation (for instance, in git branches or on someone else’s machine), the longer it takes to discover incompatibilities.

This is because when we merge two branches, we produce a new version of the product’s source code, one that has never existed or been tested. Until that merge happens, there is no opportunity to discover the problem early and have a solution already in hand.

The term “continuous integration” (CI) is described and discussed at the C2 wiki:

What if engineers didn’t hold on to modules for more than a moment? What if they made their (correct) change, and presto! everyone’s computer instantly had that version of the module? You wouldn’t ever have Integration hell because the system would always be integrated. […] there wouldn’t be any conflicts to worry about.

Since this work is aided by a service that automatically rebuilds and re-tests (well, runs the automated checks and scans at least) when changes are made, people often think of those build-and-test servers as “CI Servers.”

CI is not the act of running a build-and-test server. CI is a developer behavior, not a technical appliance.

A CI server itself will usually fail if the code cannot be compiled or if a scanner reports a seriously ill-formed bit of code, but the server doesn’t know if the compiled code actually works and can carry out useful tasks.

We need fast, automated tests that can run in the CI server and flag issues as they occur.
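To illustrate the gap between "it builds" and "it works," here is a minimal sketch. The changeset, the `apply_discount` function, and the two checks are hypothetical. Python's built-in `compile` stands in for the build step, and a single behavioral assertion stands in for the test suite.

```python
# A hypothetical changeset: syntactically valid, but it contains a logic error.
SOURCE = '''
def apply_discount(price_cents, percent):
    # Bug: adds the discount instead of subtracting it.
    return price_cents + price_cents * percent // 100
'''

def builds(source: str) -> bool:
    """What a build-only server can tell us: the code is well-formed."""
    try:
        compile(source, "<changeset>", "exec")
        return True
    except SyntaxError:
        return False

def behaves(source: str) -> bool:
    """What an automated test adds: the code does what we need."""
    namespace: dict = {}
    exec(source, namespace)
    # A 10% discount on 1000 cents should leave 900 cents.
    return namespace["apply_discount"](1000, 10) == 900

print("builds: ", builds(SOURCE))
print("behaves:", behaves(SOURCE))
```

The build step accepts this changeset and only the behavioral check rejects it. A server that stops at the build would report success.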

This suite of tests needs to match the current functionality of the program, growing as the codebase grows.

We need some kind of continuous testing and continuous development of automated tests.

By the way, if you have achieved trunk-based development (TBD) and CI, there is little reason not to go all the way to Continuous Delivery (every change kept ready to release) or even Continuous Deployment (every change released to production automatically).

Is This Asking Too Much?

When one is accustomed to working with a small team in a greenfield project, this all seems overblown and inconvenient.

Without doing something to mitigate these problems, your large projects will suffer from unpredictability, errors, delays, defects, and rolled-back releases.

Does this happen? All the time.

Do they still release software? Yes, but releasing is full of problems.

These problems are inherent in the system of work.

If the system remains unchanged, then other attempts to mitigate the problem (like increasing the reporting burden or replacing programmers or managers) will make little positive difference and may worsen the situation.

If you change the system, you don’t have the same problems.

Do We Have To Work This Way?

You are doing the right thing if you can address these three problems:

- Likelihood of incompatible changes

- Size of incompatible changesets

- Late identification of problems

If you are not having any problems with incompatible changes, late failures, merge collisions, or surprising errors, then whatever you are doing is working.

If you keep having these problems and don’t want to fix them, then that’s your choice, but maybe looking at the picture from this point of view may help you understand what your people are going through.

I wish you well regardless.